Emotion Recognition from Audio Spectrograms using CNNs

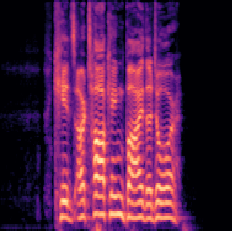

Emotions are an important part of human life, influencing communication, decision-making, and relationships. In this project, we set out to determine whether a model can accurately predict emotions from spectrograms generated from audio clips. A spectrogram is a visual representation of a sound signal's frequency content, showing how the intensity of each frequency changes over time. The challenge lies in mapping these spectrograms to specific emotional states, which is difficult because human speech and expression vary widely.
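To make the idea of a spectrogram concrete, here is a minimal sketch that computes a log-magnitude spectrogram with a short-time Fourier transform in NumPy. The function name `log_spectrogram`, the frame length, the hop size, and the synthetic 1 kHz test tone are illustrative assumptions, not the settings used in this project.

```python
import numpy as np

def log_spectrogram(signal, frame_len=512, hop=128, eps=1e-10):
    """Return a log-power spectrogram of shape (freq_bins, frames).

    frame_len and hop are illustrative defaults (assumptions).
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # One FFT per frame gives the frequency content at that moment.
    spectrum = np.fft.rfft(frames, axis=1)
    power = np.abs(spectrum) ** 2
    # Convert to decibels and put frequency on the vertical axis.
    return 10.0 * np.log10(power + eps).T

# Synthetic stand-in for an audio clip: one second of a 1 kHz tone.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)

spec = log_spectrogram(tone)
peak_bin = int(spec.mean(axis=1).argmax())
peak_hz = peak_bin * sample_rate / 512
print(spec.shape, peak_hz)
```

Each column of `spec` describes one short time slice and each row one frequency band, so the brightest row for this test tone sits at the bin closest to 1 kHz. A real clip would produce a far richer pattern across both axes.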

This project is motivated by the increasing demand for emotion-aware systems in fields like human-computer interaction, mental health monitoring, and entertainment. These systems could enable more natural interactions with virtual assistants or help therapists assess patients’ emotional well-being.

To address this challenge, we used convolutional neural networks (CNNs), which are effective at processing image-like data such as spectrograms. Our approach involved converting audio clips into spectrograms and training a CNN to classify them into emotion categories. This method leverages the ability of CNNs to detect local patterns in visual data, making it well suited to emotion recognition from audio.
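The classification step could look roughly like the PyTorch sketch below. It is not the architecture used in this project: the class name `EmotionCNN`, the layer sizes, the eight emotion categories, the 128x128 input size, and the random stand-in data are all assumptions chosen to keep the example short.

```python
import torch
from torch import nn

class EmotionCNN(nn.Module):
    """Tiny CNN for single-channel spectrogram images (illustrative only)."""

    def __init__(self, n_classes=8):  # number of emotions is an assumption
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(16, 32, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
            nn.AdaptiveAvgPool2d(1),  # collapse to one value per channel
        )
        self.classifier = nn.Linear(32, n_classes)

    def forward(self, x):
        # x: (batch, 1, freq_bins, frames) -> logits: (batch, n_classes)
        return self.classifier(self.features(x).flatten(1))

torch.manual_seed(0)
model = EmotionCNN(n_classes=8)

# Random tensors standing in for a batch of spectrograms and their labels.
batch = torch.randn(4, 1, 128, 128)
labels = torch.randint(0, 8, (4,))

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.CrossEntropyLoss()

# One training step: forward pass, loss, backward pass, weight update.
logits = model(batch)
loss = loss_fn(logits, labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(logits.shape, loss.item())
```

The adaptive pooling layer lets the same network accept spectrograms of different widths, which is convenient when clips vary in duration. In practice the single step shown here would be repeated over many batches and epochs.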

Example spectrogram of a song clip (angry emotion).